This is the first in a series of posts I plan to make about technical concepts I’m learning – Tech Bites. Explaining them will hopefully solidify my understanding. I plan for these to be relatively short; this one is definitely an outlier because it’s fairly bulky.

Encoding

Software is useful because it interacts with other code and computers. At some point this means communication across a network.

Unfortunately, the data we use in code can’t simply be sent across the internet as-is. Often it needs to be converted into a different format first. This conversion is called encoding.

Binary Encoding

In order for data to make its way across the internet, at some point it needs to become binary. That is, it needs to become 1s and 0s.

For this example, let’s use Node.js. The Node.js Buffer object allows us to represent data as bytes. A byte is 8 bits, and a bit is a 1 or a 0. Therefore a Buffer lets us store binary data!

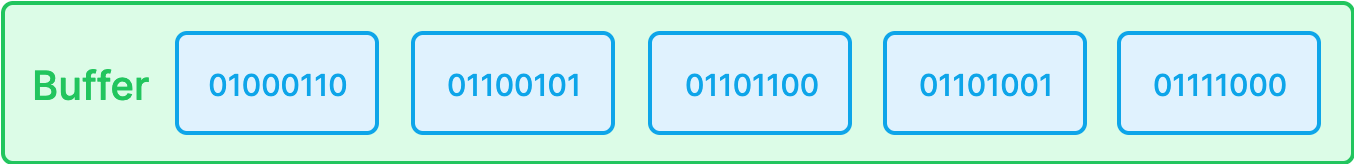

This diagram shows what a Buffer might look like:

We can convert the string 'Felix' to its raw byte representation using a Buffer. Unfortunately, it’s a little tedious to view the raw binary bytes in a Buffer, so let’s assume a helper called bufferToBinary() takes care of that for us.

```javascript
// Let's create a Buffer (byte array) from the string 'Felix'
const myBuffer = Buffer.from("Felix");

// Let's have a look at what the raw byte sequence looks like
bufferToBinary(myBuffer); // 01000110 01100101 01101100 01101001 01111000
```

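Looks like we have 5 bytes, which matches the 5 characters we provided. Nice! (That neat one-to-one match only holds because every character in 'Felix' is plain ASCII; more on that shortly.)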
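bufferToBinary() isn’t part of Node.js; it’s a hypothetical helper. Here’s one minimal way it could be written, assuming we just want each byte shown as an 8-digit, zero-padded binary string with spaces in between:

```javascript
// Hypothetical helper (not a Node.js API): render each byte of a Buffer
// as an 8-digit binary string, separated by spaces.
function bufferToBinary(buffer) {
  return Array.from(buffer) // iterating a Buffer yields numbers from 0 to 255
    .map((byte) => byte.toString(2).padStart(8, "0"))
    .join(" ");
}

console.log(bufferToBinary(Buffer.from("Felix")));
// 01000110 01100101 01101100 01101001 01111000
```

The padStart(8, "0") matters: without it, F (70) would print as 1000110, hiding the fact that every byte is exactly 8 bits.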

UTF-8

There was a magic step in the conversion though. How the hell did we know that F => 01000110 and e => 01100101 etc.? Let’s say hello to our good friend UTF-8, also known as Unicode Transformation Format – 8-bit. It’s a character encoding standard.

UTF-8 takes Unicode characters (numbers, letters, symbols, letters with accents and even emojis) and produces bytes that represent those same characters.
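Not every character fits in one byte, though. A quick way to see this is Buffer.byteLength(), which counts bytes rather than characters. The characters below are written as escapes so the source file’s own encoding can’t affect the result:

```javascript
// UTF-8 byte counts for a few characters: F, é, € and 😀
const samples = ["F", "\u00e9", "\u20ac", "\u{1F600}"];

for (const char of samples) {
  console.log(char, Buffer.byteLength(char, "utf8"), Buffer.from(char, "utf8"));
}
// F 1 <Buffer 46>
// é 2 <Buffer c3 a9>
// € 3 <Buffer e2 82 ac>
// 😀 4 <Buffer f0 9f 98 80>
```

So plain ASCII letters take one byte each, while accented letters, currency symbols and emojis take two, three or four.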

How did Node.js know which encoding to use? UTF-8 is a widely adopted standard, so Node.js uses it by default. The second argument to Buffer.from() is the encoding to use, and because it defaults to UTF-8 we don’t need to provide it explicitly.

```javascript
Buffer.from("Felix");
// is the same as
Buffer.from("Felix", "utf8");
```

Both of these say: take the string I’m providing, create a binary representation of it using the UTF-8 encoding standard, and store that in a Buffer.
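The encoding argument starts to matter once we leave plain ASCII. As a small illustration, here is the same word encoded with Node’s "utf8" and "latin1" encodings (both are encoding names Buffer.from() accepts), plus what happens when bytes are decoded with the wrong one:

```javascript
const word = "caf\u00e9"; // "café"

const defaultBytes = Buffer.from(word);         // no encoding given, so UTF-8
const utf8Bytes = Buffer.from(word, "utf8");
const latin1Bytes = Buffer.from(word, "latin1");

console.log(defaultBytes.equals(utf8Bytes)); // true
console.log(utf8Bytes);   // <Buffer 63 61 66 c3 a9>
console.log(latin1Bytes); // <Buffer 63 61 66 e9>

// Reading UTF-8 bytes as latin1 turns é's two bytes into two characters
console.log(utf8Bytes.toString("latin1")); // cafÃ©
```

Both sides of a conversation need to agree on the encoding; otherwise the same bytes turn into different text.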

You can see a list of the Unicode mappings to a base 10 (decimal) and base 2 (binary) representation on the Wikipedia page. The conversion for our example is shown below.

| Char | Decimal | Binary |
| --- | --- | --- |
| F | 70 | 01000110 |
| e | 101 | 01100101 |
| l | 108 | 01101100 |
| i | 105 | 01101001 |
| x | 120 | 01111000 |

What happens when we do try to print our Buffer? If it doesn’t show us binary, what does it show? It turns out that you can see the decimal (base 10) and hexadecimal (base 16) representations. Base 16 is useful because each hex digit corresponds to exactly four bits, which makes converting between base 16 and base 2 (binary) very easy, and it’s much more human-readable.

```javascript
// .toJSON() gives us decimal (base 10)
console.log(myBuffer.toJSON()); // { type: 'Buffer', data: [ 70, 101, 108, 105, 120 ] }

// Logging gives us hexadecimal (base 16)
console.log(myBuffer); // <Buffer 46 65 6c 69 78>
```
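Here’s a small sketch of converting hex to binary one digit (four bits) at a time, turning the hex form of our Buffer back into the binary we saw earlier. hexToBinary is a hypothetical helper for illustration, not a Node.js API:

```javascript
// Hypothetical helper: expand each hex digit into 4 bits, then regroup into bytes.
function hexToBinary(hex) {
  const bits = [...hex]
    .map((digit) => parseInt(digit, 16).toString(2).padStart(4, "0"))
    .join("");
  return (bits.match(/.{8}/g) || []).join(" "); // split into 8-bit groups
}

const hex = Buffer.from("Felix").toString("hex");
console.log(hex);              // 46656c6978
console.log(hexToBinary(hex)); // 01000110 01100101 01101100 01101001 01111000
```

For example, 4 becomes 0100 and 6 becomes 0110, so the hex byte 46 is the binary byte 01000110.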

Unicode Code Points

You might have seen Unicode code points before. They look like U+0046 and U+0065. The U+ part says this is a Unicode code point, and everything after it is the character’s number in hexadecimal! This means we can expand our conversion table as follows.

| Char | Code Point | Hex | Decimal | Binary |
| --- | --- | --- | --- | --- |
| F | U+0046 | 46 | 70 | 01000110 |
| e | U+0065 | 65 | 101 | 01100101 |
| l | U+006C | 6c | 108 | 01101100 |
| i | U+0069 | 69 | 105 | 01101001 |
| x | U+0078 | 78 | 120 | 01111000 |
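JavaScript strings can give us a character’s code point directly through codePointAt(). A sketch that rebuilds the table above for any string (codePointTable is a made-up name for illustration). For ASCII characters like these, the code point and the single UTF-8 byte are the same number; for characters beyond U+007F they differ, because UTF-8 spreads the code point across several bytes:

```javascript
// Hypothetical helper: one row per character with its code point
// in U+ notation, hex, decimal and binary.
function codePointTable(text) {
  return [...text].map((char) => {
    const codePoint = char.codePointAt(0);
    return {
      char,
      codePoint: "U+" + codePoint.toString(16).toUpperCase().padStart(4, "0"),
      hex: codePoint.toString(16),
      decimal: codePoint,
      binary: codePoint.toString(2).padStart(8, "0"),
    };
  });
}

console.table(codePointTable("Felix"));
```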

Have you ever seen this symbol before: �? If for whatever reason a byte sequence can’t be converted into a Unicode character using UTF-8, this character will rear its ugly head. UTF-8 uses 1 to 4 bytes to encode each character. This allows for a heap of characters to be encoded, but it also means the decoder needs to know whether to expect one, two, three or four bytes for each character. UTF-8 signals this with the leading bits of each byte: a byte starting with 0 is a complete single-byte character, while a byte starting with 1 is part of a multi-byte character.
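The exact patterns are: 0xxxxxxx is a single-byte character; 110xxxxx, 1110xxxx and 11110xxx start a 2, 3 or 4-byte character; and 10xxxxxx marks a continuation byte. A small sketch (describeUtf8Byte is a hypothetical helper) that labels each byte of a string’s UTF-8 encoding:

```javascript
// Hypothetical helper: classify a byte by its UTF-8 leading bits.
// This only checks bit patterns; a few bytes that match a lead pattern
// (0xC0, 0xC1 and 0xF5 to 0xF7) still never appear in valid UTF-8.
function describeUtf8Byte(byte) {
  if ((byte & 0b10000000) === 0) return "single byte (0xxxxxxx)";
  if ((byte & 0b11000000) === 0b10000000) return "continuation (10xxxxxx)";
  if ((byte & 0b11100000) === 0b11000000) return "start of 2 bytes (110xxxxx)";
  if ((byte & 0b11110000) === 0b11100000) return "start of 3 bytes (1110xxxx)";
  if ((byte & 0b11111000) === 0b11110000) return "start of 4 bytes (11110xxx)";
  return "never valid in UTF-8";
}

// "Fé€" encodes to 46 | c3 a9 | e2 82 ac
for (const byte of Buffer.from("F\u00e9\u20ac")) {
  console.log(byte.toString(2).padStart(8, "0"), describeUtf8Byte(byte));
}
```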

We can exploit this feature to generate our own �. When a character is UTF-8 encoded and takes more than one byte, its first byte starts with a 1 to signal “keep processing the bytes after me”, so it looks like 1xxxxxxx. If we provide a single byte with a 1 at the front and then try to decode it using UTF-8, the decoder can’t build a complete character from it, so it produces a �.

```javascript
// Generate a buffer of a single byte containing the decimal value 243,
// then decode it using UTF-8 to produce a string
Buffer.from([243]).toString("utf8"); // '�'
```

Notice I’ve used the decimal representation 243, which corresponds to 11110011. But any single byte fitting the pattern 1xxxxxxx will ‘work’. In decimal, that’s anything from 128 to 255.
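To check the 128 to 255 claim, we can decode every one of those bytes on its own and compare the result with "\uFFFD", the escape for �. For contrast, the sketch also decodes an ASCII byte and a complete two-byte sequence:

```javascript
const REPLACEMENT = "\uFFFD"; // escape for the � character

// Decode every lone byte from 128 to 255 on its own
const decoded = [];
for (let byte = 128; byte <= 255; byte++) {
  decoded.push(Buffer.from([byte]).toString("utf8"));
}
console.log(decoded.length);                          // 128
console.log(decoded.every((s) => s === REPLACEMENT)); // true

// A lone byte below 128 is an ordinary ASCII character...
console.log(Buffer.from([70]).toString("utf8")); // F

// ...and a lead byte followed by the continuation byte it expects decodes fine
console.log(Buffer.from([0xc3, 0xa9]).toString("utf8")); // é
```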

Binary to Text Encoding

As we just discussed, one way to encode data is to go from characters to bytes. Transporting raw bytes around the internet can have its issues, though. Systems along the way can misinterpret these bytes, which can lead to corrupt data. A simple way to avoid this is to encode data using only the most basic characters (letters, numbers and a few basic symbols). This increased compatibility does come at the cost of being less concise.

Because we’re going the opposite way here, from binary data to text, this is known as binary-to-text encoding. A common binary-to-text encoding format is base64.

“For binary-to-text encodings, the naming convention is reversed: Converting a Buffer into a string is typically referred to as encoding, and converting a string into a Buffer as decoding.” – Node.js Documentation

Let’s have a look at how base64 encoding works in Node.js.

```javascript
// Encode the string 'Felix'
const myBase64EncodedString = Buffer.from("Felix", "utf8").toString("base64");
console.log(myBase64EncodedString); // RmVsaXg=

// Decode the base64 encoded string
const myString = Buffer.from(myBase64EncodedString, "base64").toString();
console.log(myString); // Felix
```

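In the example above, we first created a base64 string representation of the characters ‘Felix’. Notice that the encoded string is longer than the original string. That’s because base64 only uses 64 simple characters (A-Z, a-z, 0-9, + and /), so each character can only carry 6 bits of data. Every 3 bytes of input become 4 characters of output, with = used as padding when the input length isn’t a multiple of 3. That’s why our 5 bytes turned into 8 characters.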
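To see where the extra length comes from, here’s a from-scratch sketch of the encoding step. It’s for learning only; in real code, Buffer’s built-in "base64" encoding is the thing to use. It packs each 3-byte group into 24 bits, slices those into four 6-bit numbers, and looks each number up in the 64-character alphabet (toBase64 is a hypothetical name):

```javascript
const ALPHABET =
  "ABCDEFGHIJKLMNOPQRSTUVWXYZabcdefghijklmnopqrstuvwxyz0123456789+/";

// Hypothetical helper: standard base64 with = padding.
function toBase64(bytes) {
  let out = "";
  for (let i = 0; i < bytes.length; i += 3) {
    const b0 = bytes[i];
    const b1 = i + 1 < bytes.length ? bytes[i + 1] : 0; // missing bytes count as 0
    const b2 = i + 2 < bytes.length ? bytes[i + 2] : 0;
    const triple = (b0 << 16) | (b1 << 8) | b2; // 24 bits

    out += ALPHABET[(triple >> 18) & 63];
    out += ALPHABET[(triple >> 12) & 63];
    // Pad with = where the group had no input byte
    out += i + 1 < bytes.length ? ALPHABET[(triple >> 6) & 63] : "=";
    out += i + 2 < bytes.length ? ALPHABET[triple & 63] : "=";
  }
  return out;
}

console.log(toBase64(Buffer.from("Felix"))); // RmVsaXg=
```

"Fel" fills a whole group and becomes RmVs; "ix" is only two bytes, so its group becomes aXg plus one = of padding.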

The second half of the example decodes this same string by first creating a Buffer from the base64 text and then turning those bytes back into a string using UTF-8. The default encoding for toString() is UTF-8, so it’s the same as toString("utf8").

It’s important to note that although it’s called binary-to-text encoding, and we end up with text at the end of the encoding process, the data is still represented as bytes when it’s sent across the internet. It’s just that these bytes are very simple to interpret: every base64 character is plain ASCII, so each one is a single byte below 128.
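One way to see this: base64 encode some bytes that aren’t valid text at all, then look at the bytes of the resulting string. Every one of them is a plain ASCII value, and decoding gives back the original bytes exactly:

```javascript
// Three bytes that don't form valid UTF-8 text
const raw = Buffer.from([0xf3, 0x00, 0xff]);

const encoded = raw.toString("base64");
console.log(encoded); // 8wD/

// The bytes of the base64 text itself are all below 128 (ASCII)
const textBytes = Buffer.from(encoded, "utf8");
console.log([...textBytes].every((byte) => byte < 128)); // true

// Decoding restores the original bytes exactly
console.log(Buffer.from(encoded, "base64").equals(raw)); // true
```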

Kafka x AWS = MSK

So where do Kafka and AWS fit into this puzzle? Well, they were the catalyst for me going down this rabbit hole in the first place.

I’m currently working on a system that has an AWS Lambda connected to MSK (Amazon Managed Streaming for Apache Kafka), AWS’s hosted version of Kafka. Whenever a message is published to a specific Kafka topic, AWS invokes our Lambda with information about that message. At first, the data it provided looked like a weird format to me. Here’s an example of what it could look like:

```json
{
  "eventSource": "aws:kafka",
  "eventSourceArn": "arn:aws:kafka:sa-east-1:123456789012:cluster/vpc-2priv-2pub/751d2973-a626-431c-9d4e-d7975eb44dd7-2",
  "records": {
    "mytopic-0": [
      {
        "topic": "mytopic",
        "partition": 2,
        "offset": 32925,
        "timestamp": 1638937502642,
        "timestampType": "CREATE_TIME",
        "key": "RmVsaXhIYXJ2ZXk=",
        "value": "QR0CyqDkeZ17jjO+SsCS6SnRwwfBqvlhO9/KfjOl/jhWPcRODDCarp0gQLuF",
        "headers": [
          {
            "myHeaderKey": [
              109, 121, 72, 101, 97, 100, 101, 114, 86, 97, 108, 117, 101
            ]
          }
        ]
      }
    ]
  }
}
```

You might recognise some of these formats. The key and value are both base64 encoded, while the header value is a byte sequence written as decimal numbers, just like the data array from toJSON() earlier.
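Decoding these fields only needs the Buffer calls from earlier. A minimal sketch, assuming a record has the shape shown above; decodeRecord is a hypothetical name, and because our values hold Avro-serialised bytes, the value is kept as a Buffer instead of being turned into a string:

```javascript
// Hypothetical helper: decode one record from the MSK event shown above.
function decodeRecord(record) {
  return {
    // key: base64 text -> bytes -> UTF-8 string
    key: Buffer.from(record.key, "base64").toString("utf8"),
    // value: base64 text -> raw bytes, left for a deserialiser such as Avro
    value: Buffer.from(record.value, "base64"),
    // headers: arrays of byte values -> UTF-8 strings
    headers: record.headers.map((header) =>
      Object.fromEntries(
        Object.entries(header).map(([name, bytes]) => [
          name,
          Buffer.from(bytes).toString("utf8"),
        ])
      )
    ),
  };
}

const record = {
  key: "RmVsaXhIYXJ2ZXk=",
  value: "QR0CyqDkeZ17jjO+SsCS6SnRwwfBqvlhO9/KfjOl/jhWPcRODDCarp0gQLuF",
  headers: [{ myHeaderKey: [109, 121, 72, 101, 97, 100, 101, 114, 86, 97, 108, 117, 101] }],
};

const decoded = decodeRecord(record);
console.log(decoded.key);          // FelixHarvey
console.log(decoded.headers);      // [ { myHeaderKey: 'myHeaderValue' } ]
console.log(decoded.value.length); // 45
```

Since records are grouped under topic-partition keys like "mytopic-0", one way to reach every record in the event above is Object.values(event.records).flat().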

The key question for me is: why does AWS provide the key and value base64 encoded, but the headers as byte sequences? Why not keep it consistent?

Well, I don’t know the answer, but my guess is that it comes down to the type of data that’s likely to occur in each field. The key and value fields can contain arbitrary binary data, since Kafka literally just stores bytes. In fact, on the other end of our pipeline we provide raw binary data by serialising it with Avro (a topic for another time, mainly because I don’t fully understand it yet). Because you can put any raw bytes onto a Kafka topic, some funky stuff could occur if they were passed along as-is. My hunch is that AWS base64 encodes them just to be safe.

As for why the headers don’t follow the same logic, my guess is that headers usually contain simple strings, so they can easily be sent as byte arrays and base64 encoding would add no benefit. The headers already contain super common characters that shouldn’t have any trouble being understood.

If you know the actual answer feel free to let me know!

Further Reading

- If you’re curious about how Node.js buffers work, have a look at the documentation: Node.js Buffer documentation.

- If you want to learn more about character encoding, read: What Every Programmer Absolutely, Positively Needs To Know About Encodings And Character Sets To Work With Text.

- If you want to read a good explanation of why we need to use base64, have a read of: Stack Overflow thread.

- If you want to dig into MSK events for Lambdas, have a look at this documentation and also this.

- If you want to see what else I’ve written, have a look in the archive.